Samsungs’ New AI Technology Can Make A Video Out Of A Still Picture

LAHORE MIRROR (Monitoring Desk)–With the rising popularity of deepfakes, Samsung has seemingly jumped on the bandwagon with their research to allow a neutral network to turn a still image into a convincing video of the person talking.

According to Motherboard, researchers at the Samsung AI center in Moscow have achieved this by training a “deep convolutional network” through the process of showing it various videos of talking heads, to allow it to identify and retain the knowledge of certain facial features, then using that data to animate a still image.

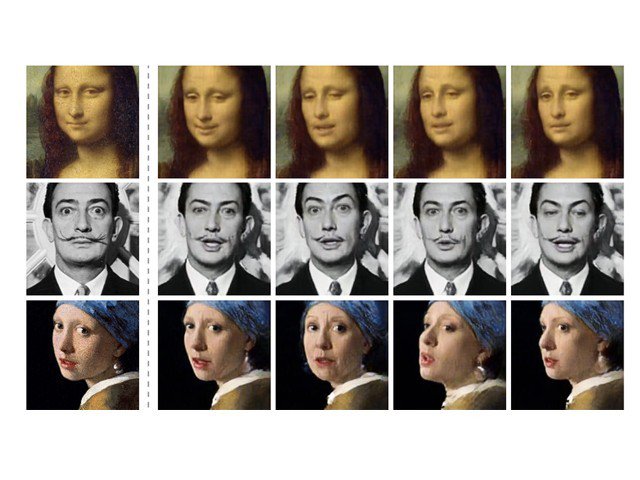

The results were depicted in a paper titled “Few-Shot Adversarial Learning of Realistic Neural Talking Head Models.” While not being as convincing as some of the deepfake videos available online, Samsung’s approach is about turning a single image into a video, whereas other deepfake videos require a larger number of images of the person you are attempting to imitate.

Using a single still image of Fyodor Dostoevsky, Salvador Dali, Albert Einstein, Marilyn Monroe and even Mona Lisa, the AI was able to create videos of them talking which are momentarily convincing enough to be perceived as actual footage. While the videos will definitely not fool an expert or someone closely examining them, they have the potential to improve drastically in a matter of years like other AI-based generated imagery.

The implications of this research are frightening. This technology would allow anyone to fabricate a video of someone else talking using simply a picture of them. Along with a tool that can imitate voices through using snippets of sample audio material, one can get anyone to “say” anything too. With the addition of tools like Nvidia’s GAN to the mix, a convincing yet fake setting could also be created for such a video. As this technology becomes increasingly accurate and accessible, discerning which videos are real and which are fabricated will become increasingly difficult. Hopefully, the tools needed to differentiate the two will become more advanced also.